1. Introduction

Style transfer: Synthesizing an image with content similar to a given image and style similar to another.

1.1 Motivation

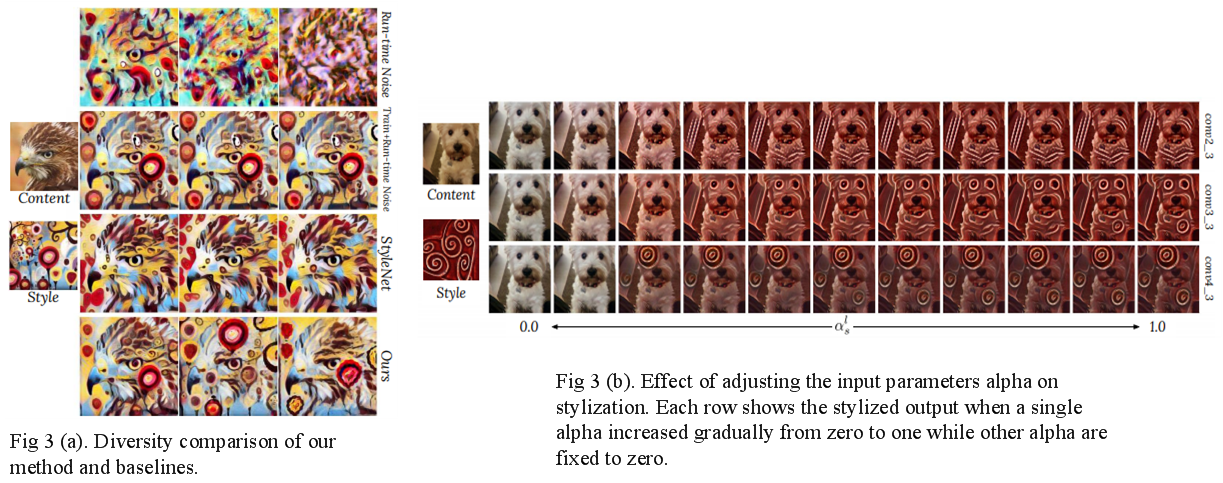

There are two main problems in style transfer on the existing methods. (i) The first weak point is that they generate only one stylization for a given content/style pair. (ii) One other issue of them is their high sensitivity to the hyper-parameters.

1.2 Goal

To solve these problems, the authors provide a novel mechanism that allows adjustment of crucial hyper-parameters, after the training and in real-time, through a set of manually adjustable parameters.

2. Background

2.1 Style transfer using deep networks

Style transfer can be formulated as generating a stylized image \(p\) whose content is similar to a given content image \(c\) and its style is close to another given style image \(s\).

\[

p= \Psi (c,s)

\]

The similarity in style can be vaguely defined as sharing the same spatial statistics in low-level features of a network (use VGG-16 in this paper), while similarity in content is roughly having a close Eculidean distance in high-level features.

The main idea is that the features obtained by the network contain information about the content of the input image while the correlation between these features represents its style.

In order to increase the similarity between two images, minimize the following distances between their extracted features:

\[

\begin{array}{l} L^l_c (p) = \Vert \phi^l (p) - \phi^l (s) \Vert^2_2 \quad \cdots Eq .(1) \cr L^l_c (p) = \Vert G(\phi^l (p)) - G(\phi^l (s)) \Vert^2_F \quad \cdots Eq .(2) \end{array}

\]

where

- \( \phi^l (x) \): Activation of a pre-trained network at layer \(l\).

- \(x\): Given the input image.

- \(L^l_c (p), ; L^l_c (p) \): Content and style loss at layer \(l \) respectively.

- \( G(\phi^l (s)) \): Gram matrix associated with \( \phi^l (p) \).

- Gram matrix \(G^l_{ij} = \sum_k \phi^l_{ik}\phi^l_{jk} \): Variance of RGB between image textures.

The total loss is calculated as a weighted sum of losses a set of content layers \(C\) and style layers \(S\):

\[

L_c (p)= \sum_{l \in C } w^l_c L^l_c (p), \; L_s (p) = \sum_{l \in S } w^l_s L^l_s(p) \quad \cdots Eq .(3)

\]

where \(w^l_c, w^l_s\) are hyper-parameters to adjust the contribution of each layer to the loss. The problem is that these hyper-parameters have to be manually fine-tuned through try and error.

Finally, the objective of style transfer can be defined as:

\[

min_p (L_c (p) + L_s (p)) \quad \cdots Eq .(4)

\]

2.2 Real-time feed-forward style transfer

We can solve the objective in Eq. (4) using the iterative method but it can be very slow and has to be repeated for any given input.

A much faster method is to directly train a deep network \(T\) which maps a given content image \(c\) to a stylized image \(p\). \(T\) is a feed-forward CNN (parameterized by \(\theta\)) with residual connections between down-sampling and up-sampling layers and is trained on content images like as:

\[

min_\theta (L_c (T(c))+L_s (T(c))) \quad \cdots Eq .(5)

\]

The second problem from this is that this generates only one stylization for a pair of style and content images.

3. Proposed Method

To address the two issues that are mentioned above, the authors condition the generated stylized image on additional input parameters where each parameter controls the share of the loss from a corresponding layer.

As for figural style, they enable the users to adjust \(w^l_c, w^l_s \) without retraining the model by replacing them with input parameters and conditioning the generated style images on these parameters:

\[

p = \Phi (c,s,\alpha_c \alpha_s)

\]

\( \alpha_c \) and \( \alpha_s \) are vectors of parameters where each element corresponds to a different layer in content layers \(C\) and style layers \( S\) respectively. \( \alpha^l_c \) and \( \alpha^l_s \) replace the hyper-parameters \( w^l_c \) and \( w^l_s \):

\[

L_c (p)= \sum_{ l \in C } \alpha^l_c L^l_c (p), \; L_s (p) = \sum_{ l \in S } \alpha^l_s L^l_s(p) \quad \cdots Eq .(6)

\]

To learn the effect of \( \alpha_c \) and \( \alpha_s \) on the objective, the authors use a technique called conditional instance normalization.

This method transforms the activations of a layer \( x \) in the \(T\) to a normalized activation \( z \) which is conditioned on additional inputs \( \alpha_= [ \alpha_c , \alpha_s] \):

\[

z= \gamma_{ \alpha } ( \frac{x- \mu}{ \sigma }) + \beta_{ \alpha } \quad \cdots Eq .(7)

\]

where \( \mu \) and \( \sigma \) are mean and standard deviation of activations at layer \( x \) across spatial axes and \( \gamma_{ alpha } \) , \( \beta_{ \alpha } \) are the trained mean and standard deviation this transformation. These parameters can be approximated using a second neural network (in here, MLP) which will be trained end-to-end with \( T \):

\[

\gamma_{ \alpha } , \beta_{ \alpha } = \Lambda ( \alpha_c, \alpha_s) \quad \cdots Eq .(8)

\]

Since \( L^l \) can be different in scale, do normalize them using their exponential moving average as a normalizing factor, i.e. each \( L^l \) will be normalized to:

\[

L^l (p) = \frac{ \sum_\{ i \in C \cup S \} \bar{L^i} (p) }{\bar{L^l} (p)} \quad \cdots Eq .(9)

\]

where \( \bar{L^l} (p) \) is the exponential moving average of \( L^l (p) \).

4. Experiment setting

They trained \( T \) and \( \Lambda \) jointly by sampling random values for \( \alpha \) from \(U(0,1) \). And they used ImageNet as content images while using paintings from Kaggle Painter by Numbers and textures from Descibable Texture Dataset as style images for training.

Similar to previous approaches, they used the last feature set of conv3 as content layer \(C\) and used the last feature set of conv2, conv3, conv4 layers from VGG-19 network as style layers \(S\). Since there is only one content layer, they fix \( \alpha_c =1 \).

5. Experiment

Reference

Babaeizadeh, Mohammad, and Golnaz Ghiasi. "Adjustable real-time style transfer." arXiv preprint arXiv:1811.08560 (2018).

'AI paper review > Mobile-friendly' 카테고리의 다른 글

| [MobileOne] An Improved One millisecond Mobile Backbone 논문 리뷰 (0) | 2022.06.25 |

|---|---|

| EfficientFormer: Vision Transformers at MobileNet Speed 논문 리뷰 (1) | 2022.06.08 |

| Lite Pose 논문 리뷰 (0) | 2022.04.18 |

| MobileViT 논문 리뷰 (0) | 2022.03.28 |

| EfficientNetv2 논문 리뷰 (0) | 2022.03.24 |