AI paper review/Model Compression

Learning Features with Parameter-free Layers Paper Review

This post reviews Learning Features with Parameter-free Layers, a Naver Clova paper accepted at ICLR 2022. The paper's contribution is an operation (layer) that substantially reduces latency while maintaining accuracy. 1. Introduction Many earlier papers have proposed efficient operations or layers. Here, "efficient" means improving or maintaining accuracy while using fewer parameters and achieving lower latency. (These existing efficient operations and layers are described in more detail later.) This paper..
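The sketch below makes "parameter-free" concrete by comparing two operations over the same tensor. A 3×3 depthwise convolution learns one filter per channel, while 3×3 max pooling learns nothing. Max pooling as the parameter-free example and the channel count of 256 are illustrative assumptions, not details taken from the excerpt above. The function names are hypothetical.

```python
def depthwise_conv_params(channels: int, k: int, bias: bool = True) -> int:
    """Learnable parameters of a k x k depthwise convolution: one k x k filter per channel."""
    return channels * k * k + (channels if bias else 0)


def max_pool2d(x, k: int = 3, stride: int = 1, pad: int = 1):
    """A parameter-free spatial operation: sliding-window max over a 2D list of numbers.

    Padded positions are simply ignored (equivalent to padding with -inf).
    """
    h, w = len(x), len(x[0])
    out = []
    for i in range(0, h + 2 * pad - k + 1, stride):
        row = []
        for j in range(0, w + 2 * pad - k + 1, stride):
            window = [
                x[r][c]
                for r in range(i - pad, i - pad + k)
                for c in range(j - pad, j - pad + k)
                if 0 <= r < h and 0 <= c < w
            ]
            row.append(max(window))
        out.append(row)
    return out


# A 3x3 depthwise conv over 256 channels learns 256*9 + 256 = 2560 parameters;
# a 3x3 max pooling over the same tensor learns none.
print(depthwise_conv_params(256, 3))                  # 2560
print(max_pool2d([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # [[5, 6, 6], [8, 9, 9], [8, 9, 9]]
```

Both operations mix information across a 3×3 neighborhood. Only the convolution adds weights that must be stored and learned.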

Learning Low-Rank Approximation for CNNs

1. Introduction Filter Decomposition (FD): Decomposing a weight tensor into multiple tensors that are multiplied consecutively, yielding a compressed model. 1.1 Motivation Well-known low-rank approximation (i.e., FD) methods such as Tucker or CP decomposition degrade model accuracy because the decomposed layers hinder training convergence. 1.2 Goal To tackle this problem, t..
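Filter decomposition can be illustrated with the simplest low-rank approximation: a truncated SVD of a 2D weight matrix, such as that of a fully connected layer. This is a stand-in for the Tucker and CP decompositions named above, which apply the same idea to 4D convolution kernels. The function names are hypothetical. One m × n layer is replaced by two consecutive layers of sizes m × r and r × n.

```python
import numpy as np


def low_rank_factorize(w: np.ndarray, rank: int):
    """Approximate w (m x n) as a @ b, with a: m x rank and b: rank x n, via truncated SVD."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # fold the kept singular values into the left factor
    b = vt[:rank, :]
    return a, b


def param_counts(m: int, n: int, rank: int):
    """(parameters of the original layer, parameters of the two factor layers)."""
    return m * n, rank * (m + n)


rng = np.random.default_rng(0)
# A weight matrix that is exactly rank 3, so a rank-3 factorization is lossless.
w = rng.standard_normal((64, 3)) @ rng.standard_normal((3, 128))
a, b = low_rank_factorize(w, rank=3)
print(a.shape, b.shape)          # (64, 3) (3, 128)
print(np.allclose(a @ b, w))     # True
print(param_counts(64, 128, 3))  # (8192, 576)
```

A real trained weight matrix is generally not exactly low-rank, so the product `a @ b` is only an approximation of the original layer.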

Few Sample Knowledge Distillation for Efficient Network Compression

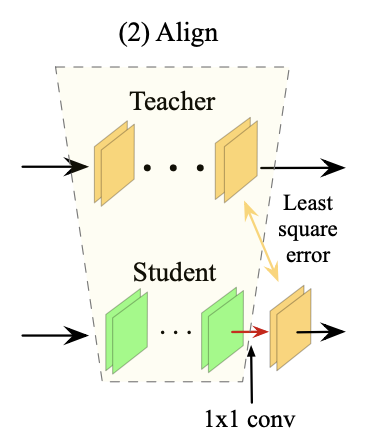

1. Introduction Knowledge distillation: Dealing with the problem of training a smaller model (Student) from a high-capacity source model (Teacher) so that it retains most of the Teacher's performance. 1.1 Motivation Compression methods such as pruning and filter decomposition require fine-tuning to recover performance. However, fine-tuning suffers from the requirement of a large training set and the time..
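The distillation objective in this definition is commonly written as the soft-target loss of Hinton et al. A NumPy sketch follows. It is the generic formulation, not the few-sample method of this paper. The temperature `t=4` and the weight `alpha=0.9` are hypothetical defaults.

```python
import numpy as np


def log_softmax(z, t: float = 1.0):
    """Numerically stable log-softmax over the last axis, with temperature t."""
    z = np.asarray(z, dtype=float) / t
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def kd_loss(student_logits, teacher_logits, labels, t: float = 4.0, alpha: float = 0.9):
    """Soft-target distillation loss plus hard-label cross-entropy."""
    log_p_t = log_softmax(teacher_logits, t)
    log_p_s = log_softmax(student_logits, t)
    # KL(teacher || student) on temperature-softened distributions, scaled by t^2
    kl = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1).mean() * t * t
    # Ordinary cross-entropy of the student against the ground-truth labels (t = 1)
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * kl + (1.0 - alpha) * ce


teacher = np.array([[4.0, 1.0, 0.5], [0.2, 3.0, 0.1]])
student = np.array([[2.0, 1.5, 0.5], [0.5, 1.0, 0.8]])
labels = np.array([0, 1])
print(kd_loss(student, teacher, labels))
```

The `t * t` factor keeps the gradient scale of the soft term roughly independent of the chosen temperature.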

Knowledge Distillation via Softmax Regression Representation Learning

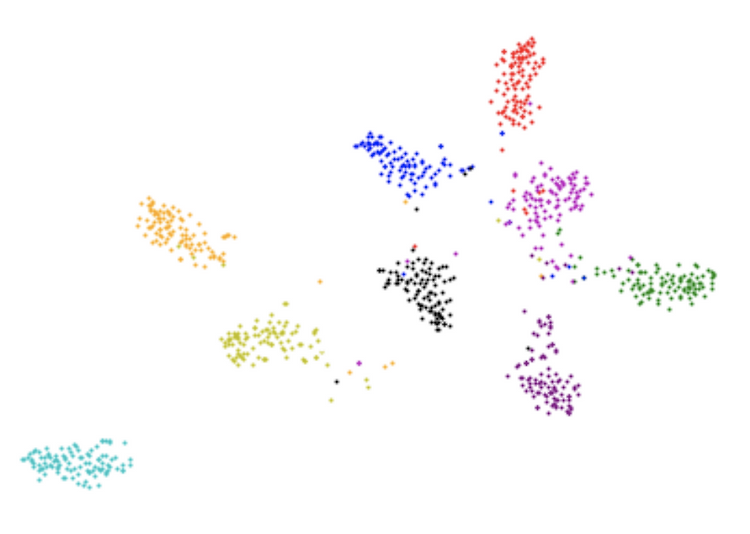

1. Introduction Knowledge Distillation: Dealing with the problem of training a smaller model (Student) from a high-capacity source model (Teacher) so that it retains most of the Teacher's performance. 1.1 Motivation The authors aim to make the outputs of the student network similar to those of the teacher network. To this end, they advocate a method that optimizes not only the output of..
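Below is a minimal NumPy sketch of the two ideas the title points to. It assumes the student's penultimate features are (1) matched to the teacher's features and (2) passed through the teacher's frozen classifier (the softmax-regression layer), so that the resulting logits match the teacher's. Several details are assumptions for illustration, not confirmed details of the paper:

- the connector matrix;
- the use of squared error for both terms;
- the function name.

```python
import numpy as np


def srrl_style_losses(f_s, f_t, connector, w_cls, b_cls):
    """f_s: student features (N x d_s); f_t: teacher features (N x d_t).

    connector (d_s x d_t) is a hypothetical learnable projection; w_cls (d_t x classes)
    and b_cls (classes,) are the teacher's frozen final linear layer.
    """
    h = f_s @ connector                      # student features in the teacher's feature space
    feat_loss = np.mean((h - f_t) ** 2)      # feature-matching term
    logits_from_student = h @ w_cls + b_cls  # teacher classifier applied to student features
    logits_teacher = f_t @ w_cls + b_cls
    logit_loss = np.mean((logits_from_student - logits_teacher) ** 2)
    return feat_loss, logit_loss


rng = np.random.default_rng(0)
f_s, f_t = rng.standard_normal((8, 16)), rng.standard_normal((8, 32))
connector = rng.standard_normal((16, 32)) * 0.1
w_cls, b_cls = rng.standard_normal((32, 10)), np.zeros(10)
print(srrl_style_losses(f_s, f_t, connector, w_cls, b_cls))
```

Reusing the teacher's classifier means the student is judged by the same decision layer that produced the teacher's outputs.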

EagleEye: Fast Sub-net Evaluation for Efficient Neural Network Pruning

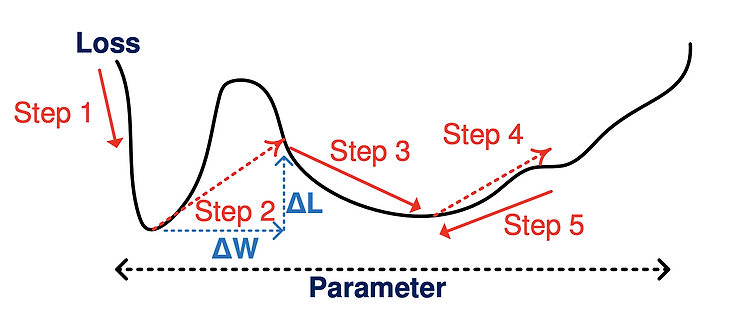

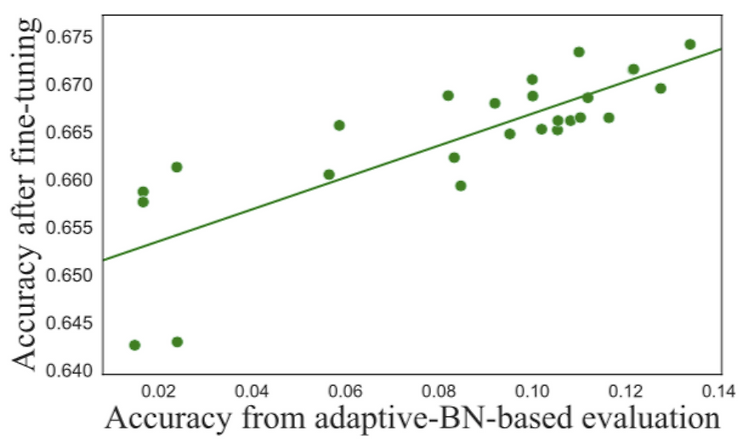

1. Introduction Pruning: Eliminating the computationally redundant parts of a trained DNN to obtain a smaller, more efficient pruned DNN. 1.1 Motivation The key to pruning a trained DNN is finding the sub-net with the highest accuracy at a reasonably small search cost. Existing methods for this problem mainly focus on the evaluation process. The evaluation process ai..
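The prune-then-evaluate loop can be sketched in NumPy. The sketch has two parts, both labeled as assumptions:

- **L1-norm filter criterion.** A common generic choice, used here only for illustration. EagleEye is about how candidate sub-nets are evaluated, not about this criterion.
- **`recalibrate_bn_stats`.** A simplified, hypothetical stand-in for re-estimating the batch-normalization statistics of a pruned sub-net on a few training batches before measuring its accuracy. It pools the batches directly rather than using running averages.

```python
import numpy as np


def prune_filters_l1(weight: np.ndarray, keep_ratio: float):
    """weight: conv kernel of shape (out_ch, in_ch, k, k).

    Keeps the output filters with the largest L1 norms; returns the pruned kernel
    and the indices of the kept filters (in original order).
    """
    norms = np.abs(weight).reshape(weight.shape[0], -1).sum(axis=1)
    n_keep = max(1, int(round(keep_ratio * weight.shape[0])))
    keep = np.sort(np.argsort(norms)[::-1][:n_keep])
    return weight[keep], keep


def recalibrate_bn_stats(activations):
    """Re-estimate per-channel mean and variance from a few batches of the pruned
    sub-net's activations, each of shape (N, C, H, W)."""
    x = np.concatenate(activations, axis=0)
    return x.mean(axis=(0, 2, 3)), x.var(axis=(0, 2, 3))


w = np.stack([np.full((2, 3, 3), i + 1.0) for i in range(4)])  # filter L1 norms 18, 36, 54, 72
pruned, kept = prune_filters_l1(w, keep_ratio=0.5)
print(kept, pruned.shape)  # [2 3] (2, 2, 3, 3)
```

Removing filters changes the activation statistics seen by later BN layers. Refreshing those statistics before evaluation therefore gives a fairer accuracy estimate for each candidate sub-net.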

Data-Free Knowledge Amalgamation via Group-Stack Dual-GAN

1. Goal The goal is to perform data-free knowledge distillation. Knowledge distillation: Dealing with the problem of training a smaller model (Student) from a high-capacity source model (Teacher) so that it retains most of the Teacher's performance. As the term "data-free" implies, knowledge distillation is performed without the original dataset on which the Teacher network was trained. This is because, in real wor..