Counterfactual Explanation Based on Gradual Construction for Deep Networks


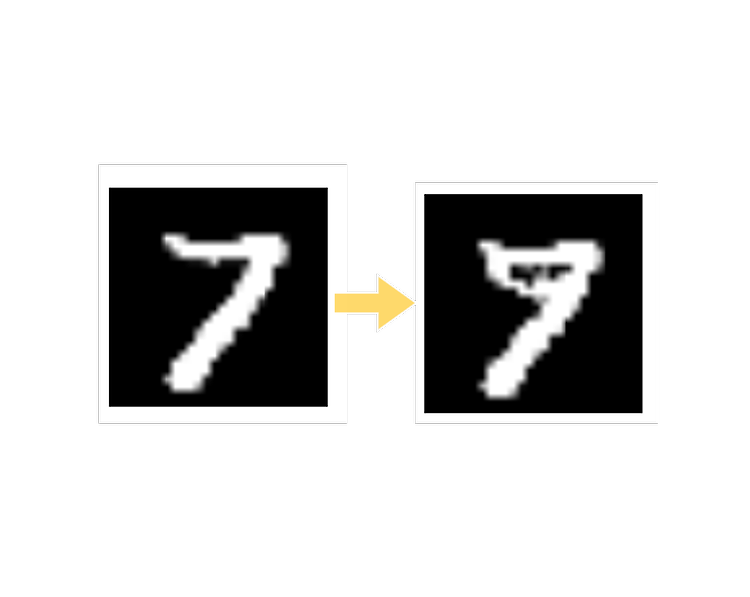

1. Counterfactual Explanation

Counterfactual explanation: given input data that a deep network classifies as some class, counterfactual explanation perturbs a subset of the input's features so that the model is forced to predict the perturbed data as a target class. The framework for counterfactual explanation is described in Fig 1. From the perturbed data, we can interpret that the pre-trained model t..
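The core idea, perturbing only a subset of features until the prediction flips to a target class, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method from the reviewed paper. It assumes a hypothetical toy linear softmax classifier with hand-picked weights `W` and `b` instead of a deep network. It greedily grows the feature subset by adding the feature whose gradient magnitude is largest, then runs gradient ascent on the target-class log-probability over the selected features only. The names `predict`, `counterfactual`, `step`, `max_features`, and `iters_per_feature` are illustrative.

```python
import math

# Hypothetical toy model (an assumption for illustration, not from the paper):
# a linear softmax classifier with 3 classes over 4 input features.
W = [
    [1.0, -0.5, 0.0, 0.2],   # class 0 weights
    [-0.3, 1.2, 0.4, 0.0],   # class 1 weights
    [0.0, 0.1, -0.8, 1.0],   # class 2 weights
]
b = [0.0, 0.0, 0.0]


def probs(x):
    """Softmax probabilities of the toy model for input x."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    """Index of the most probable class."""
    p = probs(x)
    return max(range(len(p)), key=p.__getitem__)


def grad_log_prob(x, target):
    """Gradient of log p(target | x) w.r.t. x: W[target] - sum_k p_k * W[k]."""
    p = probs(x)
    return [W[target][j] - sum(p[k] * W[k][j] for k in range(len(W)))
            for j in range(len(x))]


def counterfactual(x, target, step=0.5, max_features=None, iters_per_feature=50):
    """Greedy sketch: grow a feature subset one feature at a time and perturb
    only those features until the model predicts `target`.
    Returns (perturbed input, sorted list of perturbed feature indices);
    the prediction may still differ from `target` if the budget runs out."""
    x = list(x)  # work on a copy; leave the caller's input untouched
    n = len(x)
    max_features = n if max_features is None else min(max_features, n)
    mask = set()
    while predict(x) != target and len(mask) < max_features:
        # Add the not-yet-selected feature whose gradient is largest in magnitude.
        g = grad_log_prob(x, target)
        j = max((k for k in range(n) if k not in mask), key=lambda k: abs(g[k]))
        mask.add(j)
        # Gradient ascent on log p(target | x), restricted to the selected features.
        for _ in range(iters_per_feature):
            if predict(x) == target:
                break
            g = grad_log_prob(x, target)
            for k in mask:
                x[k] += step * g[k]
    return x, sorted(mask)


if __name__ == "__main__":
    x = [2.0, 0.0, 0.0, 0.0]
    x_cf, mask = counterfactual(x, target=1)
    print("original class:", predict(x))
    print("counterfactual class:", predict(x_cf), "perturbed features:", mask)
```

Because only the selected features are changed, the returned index list plays the role of the "subset of features" in the definition above. With a real network, `probs` and `grad_log_prob` would be replaced by the model's forward pass and an automatic-differentiation gradient.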