All posts

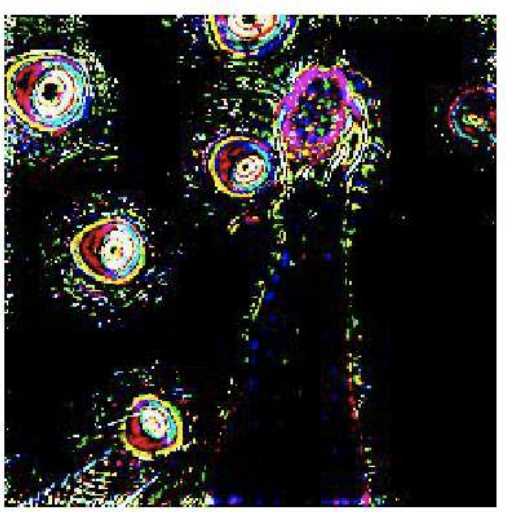

Interpretable And Fine-grained Visual Explanations For Convolutional Neural Networks

1. Goal In Interpretable And Fine-grained Visual Explanations For Convolutional Neural Networks, the authors propose an optimization-based visual explanation method that highlights the evidence in the input image for a specific prediction. 1.1 Sub-goal [A]: Defending against adversarial evidence (i.e., faulty evidence due to artifacts). [B]: Providing explanations which are both fine-grained and p..

Mediapipe (1) - Understanding Mediapipe and an Object Detection Example

1. What is Mediapipe? Mediapipe is a framework for building pipelines that perform inference on arbitrary sensory data (e.g. images, video streams). With Mediapipe, the inference pipeline of a Deep Learning (DL) model is built as a graph composed of modular components. Mediapipe therefore provides a deployment flow optimized for mobile devices and IoT, so it seems like a good choice if you want to deploy a DL model on-device. 1.1 Why use Mediapipe? End-to-End acceleration ML model..

Zero-Shot Knowledge Transfer via Adversarial Belief Matching

1. Data-free Knowledge distillation Knowledge distillation: training a smaller model (Student) from a high-capacity source model (Teacher) so that the Student retains most of the Teacher's performance. As the name suggests, data-free knowledge distillation is performed when the original dataset on which the Teacher network was trained is not available. This is because, in the real world, most datasets are proprie..

Data-Free Learning of Student Networks

1. Data-free Knowledge distillation Knowledge distillation: training a smaller model (Student) from a high-capacity source model (Teacher) so that the Student retains most of the Teacher's performance. As the name suggests, data-free knowledge distillation is performed when the original dataset on which the Teacher network was trained is not available. This is because, in the real world, most datasets are proprie..

Zero-Shot Knowledge Distillation in Deep Networks

1. What is data-free knowledge distillation? Knowledge distillation: training a smaller model (Student) from a high-capacity source model (Teacher) so that the Student retains most of the Teacher's performance. As the name suggests, data-free knowledge distillation is performed when the original dataset on which the Teacher network was trained is not available. This is because, in the real world, most datasets a..

Interpretable Explanations of Black Boxes by Meaningful Perturbation

1. How can we explain the decision of a black-box model? Given the figure on the left, we wonder why the deep network predicts the image as "dog". To answer this, we aim to find the regions of the input image that are important for classifying it as the "dog" class. \[ \downarrow \text{The idea} \] If we find and remove those regions, the probability of the prediction drops significantly. Note: removing th..

GradCAM

1. What is the goal of GradCAM? The goal of GradCAM is to produce a coarse localization map highlighting the regions of the image that are important for predicting the concept (class). GradCAM uses the gradients of any target concept (such as "cat") flowing into the final convolutional layer. Note: I (da2so) will only deal with image classification in what follows. The property ..